Social science: three presentations

Social science is about society, human nature and human interaction. It is an umbrella term to refer to sciences like anthropology, economics, education, linguistics, communication studies, sociology and psychology.

Anthropology teaches us about how people life, interact and something about culture. Education and didactic helps acquire new or modifying existing knowledge, behaviours, skills, values, or preferences. It helps us understand how we learn and how we can teach others. Sociology teaches us empirical investigation and critical analysis and gives insight in human social activity. Psychology is the study of the mind and behaviour and helps testers understand individuals and groups. Now how is this useful in testing? I’ll try to answer that question later. Let me first tell you about three awesome presentations on the subject of social science and testing.

Testing as a social science Cem Kaner did a talk titled “Testing as a social science first” time in 2006 (slides are here). I haven’t had the pleasure to see the talk myself but the slides drew my interest. Cem made me aware that to test effectively, our theories of error have to be theories about the mistakes people make and when and why they make them. We design and run tests in order to gain useful information about the product’s quality.

Cem Kaner did a talk titled “Testing as a social science first” time in 2006 (slides are here). I haven’t had the pleasure to see the talk myself but the slides drew my interest. Cem made me aware that to test effectively, our theories of error have to be theories about the mistakes people make and when and why they make them. We design and run tests in order to gain useful information about the product’s quality.

Testing is always a search for information. Cem talks about measurement and metrics and the dangers of using metrics wrongly to measure test completeness (new updated article on this can be found here). He argues that bad models are counter productive. Cem also touched the topic of inattentional blindness in which humans often don’t see what they don’t pay attention to. He reminded us that programs never see what they haven’t been told to pay attention to. This is especially valuable when thinking about test automation. When testing we can’t pay attention to all the conditions. The systems under test are simply to complex and there are to many factors that are variable (and uncontrollable). He concludes that thinking in terms of human issues leads us into interesting questions:

- What tests we are running and why?

- What risks are we anticipating and how?

- Why are these risks important?

- What we can do to help our clients gather the information they need?

At EuroStar 2012 in Amsterdam I track chaired two excellent talks, which inspired me to study the subject of social science and qualitative research more.

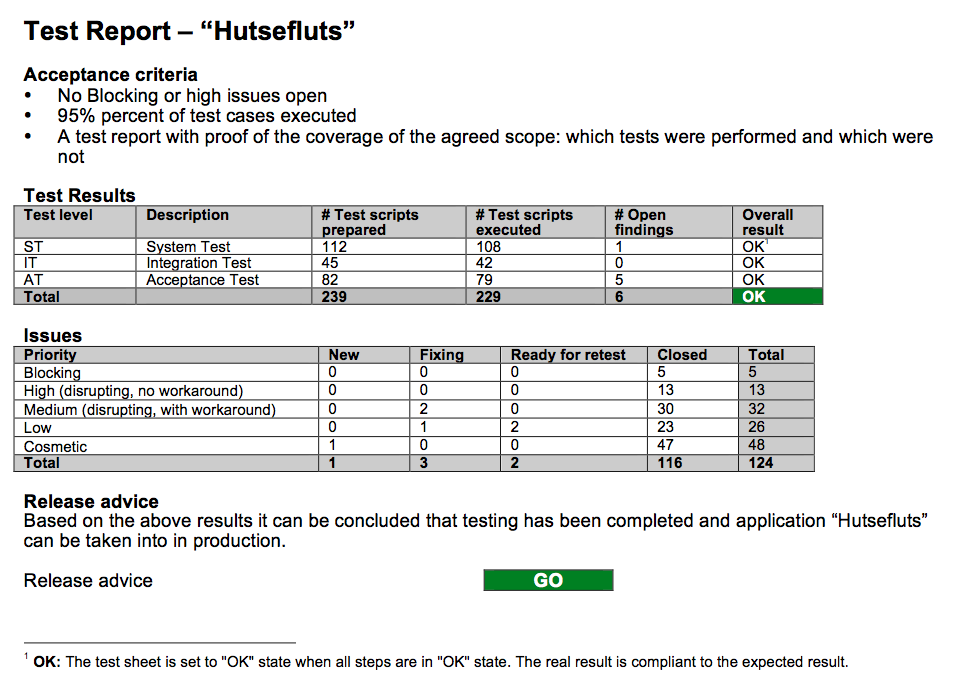

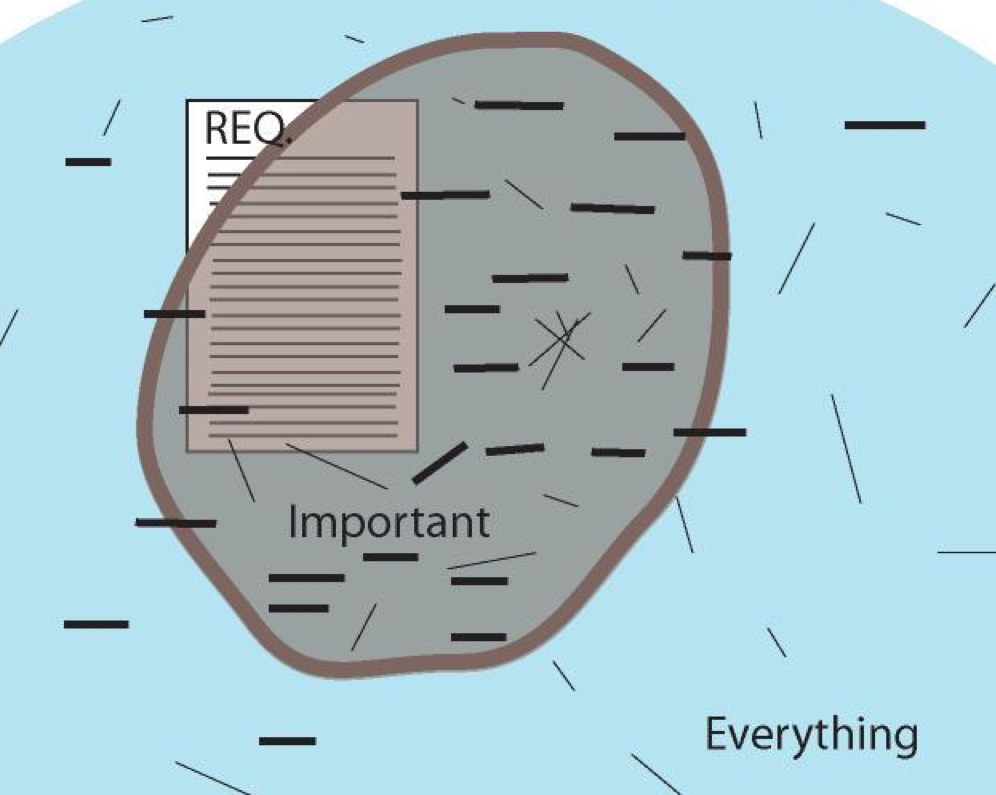

Curing Our Binary Disease Rikard Edgren talked about the getting cured from the Binary Disease (slides are here, video is here). The binary disease is when testers don’t provide useful information, because they aren’t allowed by (project) managers. They demand counting passes & fails and insist everything must be verifiable. The binary disease limits our thinking. Testers are addicted to counting passes and fails and don‘t communicate what is most important. When addicted there is no attention to serendipity moments. A model can help testers find important things, but a percentage number might not include things that are important. Therefore a coverage model is useful to get ideas but is not useful as a metric of completion. In his talk he introduces the testing potato to show that there are more things important besides written requirements. More about the potato can be found in his fabulous must read free eBook “The Little Black Book on Test Design”.

Rikard Edgren talked about the getting cured from the Binary Disease (slides are here, video is here). The binary disease is when testers don’t provide useful information, because they aren’t allowed by (project) managers. They demand counting passes & fails and insist everything must be verifiable. The binary disease limits our thinking. Testers are addicted to counting passes and fails and don‘t communicate what is most important. When addicted there is no attention to serendipity moments. A model can help testers find important things, but a percentage number might not include things that are important. Therefore a coverage model is useful to get ideas but is not useful as a metric of completion. In his talk he introduces the testing potato to show that there are more things important besides written requirements. More about the potato can be found in his fabulous must read free eBook “The Little Black Book on Test Design”.

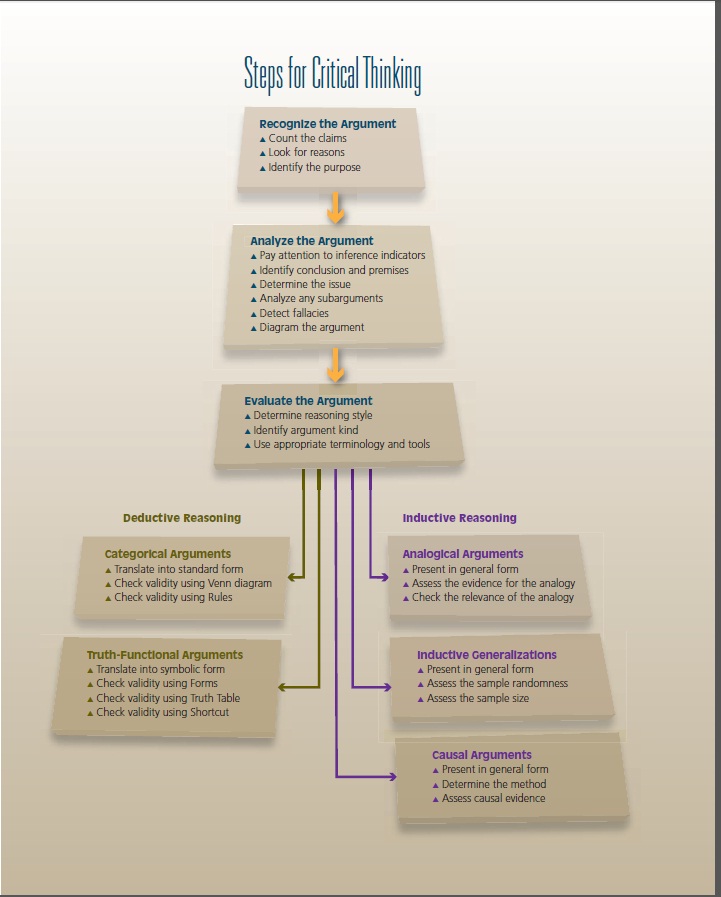

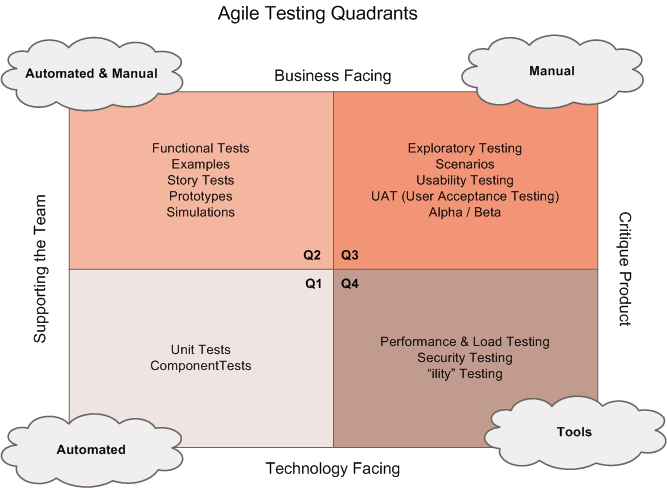

Testing Through The Qualitative Lens Michael Bolton’s (slides of the StarEast version are here) talk elaborated differences between physical and social sciences. In physics, humans are ideally irrelevant and mostly get in the way of the experiment. Use quantitative and qualitative research methods and accept high tolerance for ambiguity, context-specific results and be aware of biases while doing research. We should value “partial answers that might be useful”. You do qualitative research when you want to understand something. You do quantitative research to inform that understanding. Quantitative research put human values first; use participant observation and practice storytelling and narration. Software testing is the investigation of systems composed of people, computer programs, products, and the relationships between them. Excellent testing is more like anthropology: interdisciplinary, systems-focused, investigative, and uses storytelling.

Michael Bolton’s (slides of the StarEast version are here) talk elaborated differences between physical and social sciences. In physics, humans are ideally irrelevant and mostly get in the way of the experiment. Use quantitative and qualitative research methods and accept high tolerance for ambiguity, context-specific results and be aware of biases while doing research. We should value “partial answers that might be useful”. You do qualitative research when you want to understand something. You do quantitative research to inform that understanding. Quantitative research put human values first; use participant observation and practice storytelling and narration. Software testing is the investigation of systems composed of people, computer programs, products, and the relationships between them. Excellent testing is more like anthropology: interdisciplinary, systems-focused, investigative, and uses storytelling.

To be continued… part 5: So what can we learn from social science?

Daniel Kahneman wrote a fascinating book about how our brain works “

Daniel Kahneman wrote a fascinating book about how our brain works “ Dancing gorillas

Dancing gorillas

Why are some people so obsessed by expected results? And why is there a need to have expected results to be able to plan testing? Expected results can be very helpful, but there is much more to quality then doing some tests with an expected result. A definition that I like is by Jerry Weinberg: “Quality is value to some person”. To understand this, you might want to read

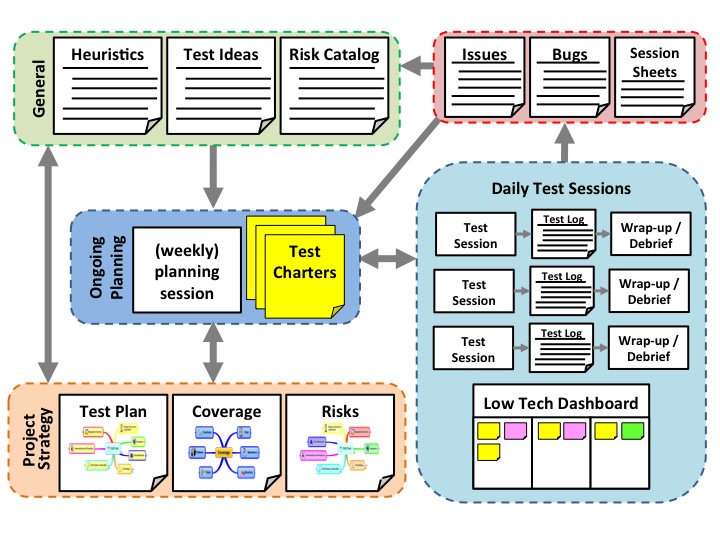

Why are some people so obsessed by expected results? And why is there a need to have expected results to be able to plan testing? Expected results can be very helpful, but there is much more to quality then doing some tests with an expected result. A definition that I like is by Jerry Weinberg: “Quality is value to some person”. To understand this, you might want to read  Using a coverage outline in a mind map or a simple spreadsheet, I keep track of what I have tested. My charters (a one to three sentence mission for a testing session) help me focus, my wrap-up and/or debriefings help me determine how good my testing was. My

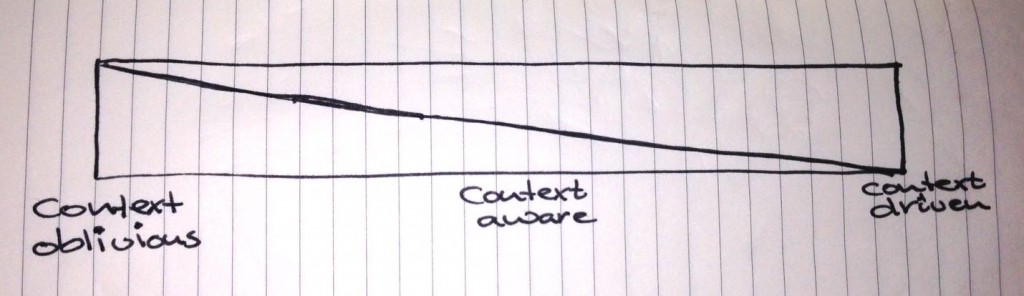

Using a coverage outline in a mind map or a simple spreadsheet, I keep track of what I have tested. My charters (a one to three sentence mission for a testing session) help me focus, my wrap-up and/or debriefings help me determine how good my testing was. My

Recent Comments