In the October edition of Testing Trapeze my experience report “How I became a rapid software testing trainer” is published. It has been an amazing journey! When I started this journey, I thought it would be much easier. It was a lot of hard work, a struggle sometimes, but it was totally worth it! A journey in which I learned a lot about myself and the testing craft. I am looking forward to teaching the next class in December in the Netherlands this year! See you there?

Testing Trapeze is a free high quality testing magazine from Australia and New Zealand, advocating good testing practices.

Yesterday the wonderful people from Lucky Cat Tattoo put a piece of art on my arm.

Stay Hungry. Stay Foolish.

This was the ‘farewell message’ of the Whole Earth Catalog. It was placed on the back cover of the final edition in 1974. Steve Jobs used this quote in his famous commencement speech in 2005 at Stanford University. While writing this blog post I found this article by a neuroscientist explaining what the quote means…

Hungry

Hungry points to always looking for more, striving to improve, being ambitious and eager. Everything I do, I do with passion. What keeps me moving is energy and passion. I need challenges to feel comfortable. I want to be good at almost everything I do. Not just good, but the very best. As a result, the people around me sometimes suffer because I always want to do more and do better. Fortunately, I have an above-average energy level and that helps me do what I do. This video of Steve Jobs summarizes how I work.

Foolish

Foolish points to taking risks, feeling young, being daring, exploratory and adventurous. Like a child learning how the world works by trying everything. It also reminds us not to always do what people expect us to do and not to always take the traditional paths in life.

I’m curious. This is an important characteristic in a software tester. Richard Feynman, the Nobel Prize winner, was a tester, though he was officially a natural scientist. In this video, “The pleasure of finding things out”, he talks about certainty, knowledge and doubt (from 47:20). Critical thinking about observations and information is important in my work! Richard Feynman never took anything for granted. He took the scientific approach and thought critically about his work. He doubted a lot and asked many questions to verify.

Because of my curiosity, I want to know everything. This has one big advantage: I want to develop and practice continuous learning. The great thing about my job is that testers get paid for learning: testing is gathering information about things that are important to stakeholders to inform decisions. I love to read and I read a lot to discover new things. I also ask for feedback on my work to develop myself continuously. Lately, experiential learning has my special attention. I wrote a column about why I like this way of learning. When it comes to learning, two great TED videos come to mind: “Schools kill creativity” and “Building a school in the cloud”. These videos tell a story about how we learn and why schools (or learning in general) should change.

Think different!

Here’s to the crazy ones. The misfits. The rebels. The troublemakers. The round pegs in the square holes. The ones who see things differently. They’re not fond of rules. And they have no respect for the status quo. You can quote them, disagree with them, glorify or vilify them. About the only thing you can’t do is ignore them. Because they change things. They push the human race forward. And while some may see them as the crazy ones, we see genius. Because the people who are crazy enough to think they can change the world, are the ones who do.

Jedi

Besides the fact that I am a huge fan of Star Wars, the Jedi sign has a deeper meaning:

“Because testing (and any engineering activity) is a solution to a very difficult problem, it must be tailored to the context of the project, and therefore testing is a human activity that requires a great deal of skill to do well. That’s why we must study it seriously. We must practice our craft. Context-driven testers strive to become the Jedi knights of testing.” (source: “The Dual Nature of Context-Driven Testing” by James Bach).

I believe that learning is not as simple as taking a class and then starting to do it. To become very good at something you need mentors who guide you in your journey. I strongly believe in Master-Apprentice. Young Padawans are trained to become Jedi Knights by a senior (knight or master) who teaches them everything there is to know. The more they learn, the more responsibility the student gets. That is why I am happy that I have mentors who teach, coach, mentor, challenge and guide me. And that is why I am a mentor for others doing the same. Helping them to learn and become better.

Bear

In 2013 I took the awesome Problem Solving Leadership (PSL) workshop facilitated by Jerry Weinberg, Esther Derby and Johanna Rothman. This amazing six-day workshop gave me many valuable insights into myself and how to be a better leader by dealing effectively with problems (or as Jerry puts it: enhancing the environment so that everybody is empowered to contribute creatively to solve problems). During the social event we visited the Indian Pueblo Cultural Center, which also made a big impression on me. Here I bought a talisman stone engraved with a bear. In Native American beliefs the bear symbolizes power, courage, freedom, wisdom, protection and leadership (more info on bear symbolism: here, here and here).

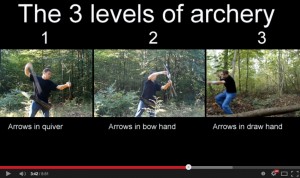

Yesterday I saw this awesome video of Lars Andersen: a new level of archery. It is going viral on the web: it has been watched over 11 million times within 48 hours. Now watch this movie carefully…

The first time I watched this movie, I was impressed. Having tried archery several times, I know how hard it is to do. Remember Legolas from the Lord of the Rings movies? I thought that was “only” a movie and his shooting speed was greatly exaggerated. But it turns out Lars Andersen is faster than Legolas. My colleague Sander sent me an email about the movie I had just watched, saying it was an excellent example of craftsmanship, something we had been discussing earlier this week. So I watched the movie again…

Also read what Lars has to say in the comments on YouTube and make sure you read his press release.

This movie exemplifies the importance of practice and skills! It explains archery in a way a context-driven tester would explain his testing…

0:06 These skills have long since been forgotten. But master archer Lars Andersen is trying to reinvent what has been lost…

Skills are the backbone of everything being done well. So in testing skills are essential too. I’ll come back to that later on. And the word reinvent triggers me as well. Every tester should reinvent his own testing. Only by going very deep, understanding every single bit, and practicing again and again will you truly know how to be an excellent tester.

0:32 This is the best type of shooting and there is nothing beyond it in power or accuracy. Using this technique Lars set several speed shooting records and he shoots more than twice as fast as his closest competitors…

Excellent testers are faster and better. Last week I heard professor Chris Verhoef speak about skills in IT and he mentioned that he has seen a factor of 200 difference in productivity between excellent programmers and bad programmers (he called them “Timber Smurf” or “Knutselsmurf” in Dutch).

0:42 … being able to shoot fast is only one of the benefits of the method

Faster testing! Isn’t that what we are after?

0:55 Surprisingly the quiver turned out to be useless when it comes to moving fast. The back quiver was a Hollywood Myth…

The back quiver is a Hollywood myth. It looks cool and may look handy at first sight, since you can put a lot of arrows in it. Doesn’t this sound like certificates and document-heavy test approaches? The certificates look good on your resume and the artifacts look convenient to help you structure your testing… But they turn out to be worthless when it comes to testing fast.

1:03 Why? Because modern archers do not move. They stand still firing at a target board.

I see a parallel here with old school testing: testers had a lot of time to prepare in the waterfall projects. The basic assumption was that the target wasn’t moving, so it was like shooting at a target board. Although the target always proved to be moving, the testing methods were designed for target boards.

1:27 Placing the arrow left around the bow is not good while you are in motion. By placing your hand on the left side, your hand is on the wrong side of the string. So you need several movements before you can actually shoot.

Making a ton of documentation before starting to test is like several movements before you can actually test.

1:35 From studying old pictures of archers, Lars discovered that some historical archers held their arrow on the right side of the bow. This means that the arrow can be fired in one single motion. Both faster and better!

Research and study is what is lacking in testing for many. There is much we can learn from the past, but also from social science, measurement, designing experiments, etc.

1:56 If he wanted to learn to shoot like the master archers of old, he had to unlearn what he had learned…

Learning new stuff, learning how to use heuristics and train real skills, needs testers to unlearn APPLYING techniques.

2:07 When archery was simpler and more natural, exactly like throwing a ball. In essence making archery as simple as possible. It’s harder to learn to shoot this way, but it gives more options and ultimately it is also more fun.

It is hard to learn and it takes a lot of practice to learn to do stuff in the most efficient and effective way. Context-driven testing sounds difficult, but in essence it makes testing as simple as possible. That means it becomes harder to learn because it removes all the methodical stuff that slows us down. Instrumental approaches that try to put everything in a recipe, so it can be applied by people who do not want to practice, make testing slow and ineffective.

2:21 A war archer must have total control over his bow in all situations! He must be able to handle his bow and arrows in a controlled way, under the most varied of circumstances.

Lesson 272 in the book Lessons Learned in Software Testing: “If you can get a black belt in only two weeks, avoid fights”. You have to learn and practice a lot to have total control! That is what we mean by excellent testing: being able to do testing in a controlled way, under the most varied of circumstances. Doesn’t that sound like Rapid Software Testing? RST is the skill of testing any software, any time, under any conditions, such that your work stands up to scrutiny. This is how RST differs from normal software testing.

2:36 … master archers can shoot the bow with both hands. And still hit the target. So he began practicing…

Being able to do the same thing in different ways is a big advantage. Also in testing we should learn to test in as many different ways as possible.

3:15 perhaps more importantly: modern slow archery has led people to believe that war archers only shot at long distances. However, Lars found that they can shoot at any distance. Even up close. This does require the ability to fire fast though.

Modern slow testing has led people to believe that professional testers always need test cases. However, some testers found that they could work without heavyweight test documentation and test cases. Even on very complex or critical systems, and even in regulated environments. This does require the ability to test fast though.

3:34 In the beginning archers probably drew arrows from quivers or belts. But since then they started holding the arrows in the bow hand. And later in the draw hand. Taking it to this third level, that of holding the arrows in the draw hand, requires immense practice and skill and only professional archers, hunters and so on would have had the time for it. … and the only reason Lars is able to do it is that he has spent years practicing intensely.

Practice, practice, practice. And this really makes the difference. I hear people say that context-driven is not for everybody. We have to accept that some testing professionals only want to work 9 to 5. This makes me mad!

I think professional excellence can and should be for everyone! And sure, you need to put a lot of work into it! Compare it to football (or any other thing you want to be good at, like solving crossword puzzles, drawing, chess or … archery). It takes a lot of practice to play football in the Premiership or the Champions League. I am convinced that anyone can be a professional football player. But it doesn’t come easily. It demands a lot of effort in learning, drive (intrinsic motivation, passion), the right mindset and choosing the right mentors/teachers. Talent maybe helps, and perhaps you need some talent to be the very best, like Lionel Messi … But dedication, learning and practice will take you a long way. We are professionals! In football, the subset of players who do not want to practice and work hard will soon end up on the bench, won’t get a new contract and will soon disappear to the amateurs. The same goes for testers.

4:06 The hard part is not how to hold the arrows, but learning how to handle them properly. And draw and fire in one single motion no matter what method is used.

Diversity has been key in context-driven testing for many years. As testers we need to learn how to properly use many different skills, approaches, techniques, heuristics…

4:12 It works in all positions and while in motion…

… so we can use them in all situations, even when we are under great pressure and have to deal with huge complexity, confusion, changes, new insights and half answers.

5:17 While speed is important, hitting the target is essential.

Fast testing is great, but doing the right thing, like hitting the target, is essential. Context-driven testers know how to analyze and model their context to determine what the problem is that needs to be solved. Knowing the context is essential to do the right things to discover the status of the product and any threats to its value effectively, so that ultimately our clients can make informed decisions about it. Context analysis and modelling are some of the essential skills for testers!

There are probably more parallels to testing. Please let me know if you see any more.

“Many people have accused me of being fake or have theories on how there’s cheating involved. I’ve always found it fascinating how human it is, to want to disbelieve anything that goes against our world view – even when it’s about something as relatively neutral as archery.” (Lars Andersen)

Some context: this blogpost is my topic for a new peer conference called “Board of Agile Testers (BAT)” on Saturday December 19 2014 in Hotel Bergse Bossen in Driebergen.

I love agile and I love hugging… For me an agile way of working is a, not THE, solution to many irritating problems I suffered from in the 90’s and 00’s. Of course people are the determining factor in software development. It is all about people developing (as in research and development) software for people. So people are mighty important! We need to empower people to do awesome work. People work better if they have fun and feel empowered.

Vineet Nayar says that people who want to excel need two important things: a challenge and passion. These factors resemble the ones described by Daniel Pink: autonomy makes room to excel, passion feeds mastery and a challenge gives purpose. I wrote an article about this subject for Agile Record called “Software development is all about people”. I see agile teams focus on this people stuff like collaboration, working together, social skills… But why do they often forget Mastery in testing?

Rapid Software Testing teaches serious testing skills by empowering testers in a martial art approach to testing. Not by being nice and hugging others. By teaching testers serious skills to talk about their work, say what they mean, stand up for excellence. RST teaches that excellent testing starts with the skill set and the mindset of the individual tester. Other things might help, but excellence in testing must centre on the tester.

One of the many examples is in the new “More agile testing” book by Lisa & Janet. In chapter 12, Exploratory testing, there is a story by Lisa: “Lisa’s story – Spread the testing love: group hugs!” My review comment was and I quote: “I like the activity but do not like the name… I fear some people will not take it too serious… It might get considered too informal or childish. Consider a name like bug hunts.”

Really? Hugs? The whole hugging ethos in agile makes me CRAZY. Again, I love hugging and in my twitter profile it says I am a people lover. But a fluffy approach to agile in general and testing in particular makes me want to SCREAM! It makes me mad! Stop diminishing skills. If people are doing good work, sure hug them, but if they don’t: give them some serious feedback! Work with them to get better and grow. Mentor them, coach them, teach them. But what if they do not improve? Or do not want to improve? Well… maybe then it is time to say goodbye? It is time to start working on some serious skills!

Testing is serious business, already suffering from misunderstanding and underestimation by many who think they can automate all testing and everybody can test. In agile we are all developers and t-shaped people will rule the world. In 15 years there will be only developers doing everything: writing documentation, coding and testing… Yeah right! I wish I could believe that. Testing is HARD and needs a lot of study. As long as I see a vast majority of people not willing to study testing, I know I will have a job as a testing expert for the rest of my life!

This blogpost reflects some “rough ideas”. After the peer conference I will update this post with the ideas discussed in the room.

On LinkedIn David Morgan started an interesting discussion titled: “ISO/IEC/IEEE 29119 – why the fear and opprobrium”. In this discussion Cor van Rijn asked me this question:

“@Huib,

your comment gives the impression that you do not believe in standards,

Please enlighten me and let me know what are the DISadvantages to standards.

Personally I am a strong believer in standards, given that they are applied in a matter that is suitable to the environment and the problem (so with regards to complexity, size and risk) and that they should be used as a guideline and that issues that are not relevant should be omitted, due to your consideration and specific situation.

In that respect I would like to refer to the IEEE standards for software test documentation where this idea is phrased explicitly in the text of the standard.”

Much has been said about ISO 29119 in recent weeks. For some background, please have a look at the many things said online in my collection of resources on the controversy.

So what is wrong with this ISO 29119 standard?

- The standard is not available publicly. How can I comply with or even discuss a standard that is not publicly available?

- ISO is a commercial organisation. The standard is a form of “rent-seeking”. One form of rent-seeking is using regulations or standards in order to create or manipulate a market for consulting, training, and certification.

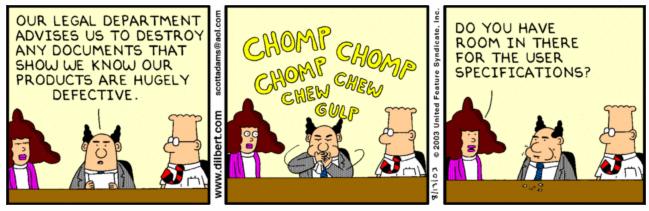

- The standard embodies a document-heavy test process which is unnecessary and therefore in many situations waste. Didn’t history show us that documentation and processes are important but that there are more important things we should consider?

- The standard doesn’t speak about the most important thing in testing: skills! The word skill is used 8 times in the first three parts of the standard (270 pages!) and not once is it made clear WHICH skills are needed. Only that you need to get the right skills to do the job.

- There is much wrong with the content. For instance: the writers don’t understand exploratory testing AT ALL. I wish I could quote the standard, but it is copyrighted. [Edit: it turns out I can quote the standard, so I edited this blog post and added some quotes (in blue) from the ISO 29119 standard, part 1-3]. Here are some examples (HS marks my comments on the quotes):

1. The definition on page 7 in part 1: “exploratory testing experience-based testing in which the tester spontaneously designs and executes tests based on the tester’s existing relevant knowledge, prior exploration of the test item (including the results of previous tests), and heuristic “rules of thumb” regarding common software behaviours and types of failure. Note 1 to entry: Exploratory testing hunts for hidden properties (including hidden behaviours) that, while quite possibly benign by themselves, could interfere with other properties of the software under test, and so constitute a risk that the software will fail.”

HS: Spontaneously? Like magic? Or maybe using skills? There are many, many more heuristics I use while testing. And most important: I miss learning in this definition. Testing is all about learning. ET doesn’t only hunt for hidden properties, it is about learning about the product tested.

2. The advantages and disadvantages of scripted and unscripted testing on page 33 in part 1:

“Disadvantages Unscripted Testing

Tests are not generally repeatable.”

HS: Why are the tests not repeatable? I take notes. If needed I can repeat ANY test I do. I do not think it is an interesting question whether tests are repeatable or not, and I do not understand the constant pursuit of repeatable tests. To me that is old school thinking. More interesting is to teach testers about reasons to repeat tests.

“The tester must be able to apply a wide variety of test design techniques as required, so more experienced testers are generally more capable of finding defects than less experienced testers.”

HS: Duh! Isn’t that the case in ANY testing?

“Unscripted tests provide little or no record of what test execution was completed. It can thus be difficult to measure the dynamic test execution process, unless tools are used to capture test execution.”

HS: Bollocks! I take notes of what has been tested and I dare to say that my notes are more valuable than a pile of test cases saying passed or failed. It is not about test execution completed, it is about coverage achieved. In my experience exploratory testers are way better at reporting their REAL coverage and telling a good story about their testing. Even if tools are used to capture test execution, how would you measure the execution process? Count the minutes on the video?

3. Test execution on page 37 in part 2:

“8.4.4.1 Execute Test Procedure(s) (TE1) This activity consists of the following tasks:

a) One or more test procedures shall be executed in the prepared test environment.

NOTE 1 The test procedures could have been scripted for automated execution, or could have been recorded in a test specification for manual test execution, or could be executed immediately they are designed as in the case of exploratory testing.

b) The actual results for each test case in the test procedure shall be observed.

c) The actual results shall be recorded.

NOTE 2 This could be in a test tool or manually, as indicated in the test case specification.

NOTE 3 Where exploratory testing is performed, actual results can be observed, and not recorded.”

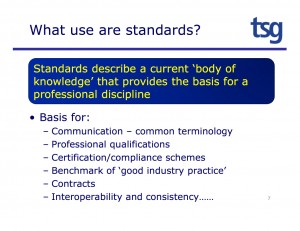

HS: Why should I record every actual result? That’s a lot of work and administration. But wait, if I do exploratory testing, I don’t have to do that? *sigh*

- I think there is no need for this standard. I have gone through the arguments used in favour of this standard in the slides of a talk by Stuart Reid (convener of ISO JTC1/SC7 WG26 (Software Testing) developing the new ISO 29119 Software Testing standard) held at SIGIST in 2013 and Belgium Testing Days in 2014:

“Confidence in products”? Sure, with a product standard maybe. But testing is not a product or a manufacturing process! “Safety from liability”? So this standard is to cover my ass? Remember that a well designed process badly executed will still result in bad products. Guidelines and no “best practice”? I wish that were true, but practice shows that these kinds of standards become mandatory and best practice very soon…

Common terminology is dangerous. Read Michael Bolton’s posts about it here and here. To be able to truly understand each other, we need to ask questions and discuss in depth. Shallow agreement about a definition will result in problems. Professional qualifications and certification schemes? We have those and they didn’t help, did they? Benchmarks of “good industry practice” are context-dependent. The purpose of a standard is to describe stuff context-free. So how can a standard be used as a benchmark? Ah! Best practice after all?

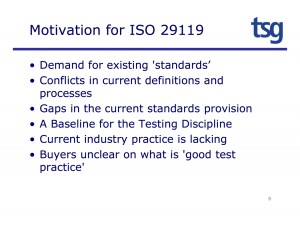

Who is demanding this standard? And please tell me why they want it. There will always be conflicts in definitions and processes. We NEED different processes to do our job well in the many different contexts we work in. A baseline for the testing discipline? Really? Without mentioning any context? What current industry practice is lacking is skills! We need more excellent testers. The only way to become excellent is to learn, practice and get feedback from mentors and peers. That is how it works. Buyers are unclear what good test practice is? How does that work with selecting a doctor or a professional soccer player? Would you look at their certifications and standards used or is there something else you would do?

I do believe in standards. I am very happy that there are standards: mostly standards for products, not processes. Testing is a performance and not a pile of documents and a process you can standardise. I think there is a very different process needed to test a space shuttle, a website and a computer chip producing machine.

I wish that standards would be guidelines, but reality shows standards often become mandatory. This post by Pradeep Soundararajan gives you some examples. That is why I think this standard should be stopped.

Finally, let’s have a look at what the ISO claims on the “http://www.softwaretestingstandard.org/” website:

“ISO/IEC/IEEE 29119 Software Testing is an internationally agreed set of standards for software testing that can be used within any software development life cycle or organisation. By implementing these standards, you will be adopting the only internationally-recognised and agreed standards for software testing, which will provide your organisation with a high-quality approach to testing that can be communicated throughout the world.”

Really? I think it is simply not true. First of all, since the petition is signed by hundreds of people from all over the world and over 30 people blogged about it, I guess the standard is not really internationally agreed. And second: how will it provide my (client’s) organisation with a high-quality approach? Again: the quality of any approach lies in the skills and the mindset of the people doing the actual work.

I think this standard is wrong and I signed the petition and the manifesto. I urge you to do the same.

This post was edited after Esko Arajärvi told me I can quote text from the standard. ISO is governed by Swiss law, and the Swiss Federal Act of October 9, 1992 on Copyright and Related Rights (status as of January 1, 2011) says in article 25: Quotations. 1 Published works may be quoted if the quotation serves as an explanation, a reference or an illustration, and the extent of the quotation is justified for such purpose. 2 The quotation must be designated as such and the source given. Where the source indicates the name of the author, the name must also be cited.

I talked at TestBash about context-driven testing in agile. In my talk I said that I refuse to do bad work. Adam Knight wrote a great blog post “Knuckling Down” about this: “One of the messages that came up in more than one of the talks during the day, most strongly in Huib Schoots talk on Context Driven in Agile, was the need to stick to the principle of refusing to do bad work. The consequential suggestion was that a tester should leave any position where you are asked to compromise this principle.”

Adam also writes: “What was missing for me in the sentiments presented at TestBash was any suggestion that testers should attempt to tackle the challenges faced on a poor or misguided project before leaving. In the examples I noted from the day there was no suggestion of any effort to resolve the situation, or alter the approach being taken. There was no implication of leaving only ‘if all else fails’. I’d like to see an attitude more around attempting to tackle a bad situation head on rather than looking at moving on as the only option. Of course we should consider moving if a situation in untenable, but I’d like to think that this decision be made only after knuckling down and putting your best effort in to make the best of a bad lot.”

Interesting because I think I said exactly that: “if everything else fails, leave!” But maybe I only thought that and forgot to say it out loud, I am not sure. Let’s wait for the video that will give us the answer. But in the meantime: of course Adam is right and I am happy that he wrote his blog post. Because if I was too firm or too distinct, he gave me a chance to explain. Because looking back, I have done many projects where, if I hadn’t tried to change stuff, I would have left many of them in the first couple of days. There is a lot of bad testing around. So what did I try to say?

Ethics again.

This topic is very closely related to ethics in your work! Refusing to do bad work is an ethical statement. Ethics are very important to me and I hope more testers will recognize that only being ethical will change our craft. Ethics help us decide what is right and what is wrong. Have a look at the ethics James Bach summed up in this blog post “Thoughts Toward The Ethics of Testing”. Nathalie pointed to an article she wrote on ethics in a reply to my last post.

Ethics and integrity go hand in hand. Ethics are the external “rules and laws” and integrity is your internal system of principles that guides your behaviour. Integrity is a choice rather than an obligation and will help you do what is right even if no one is watching.

I refuse to do bad work!

Bad work is any work that is deliberately bad. I am thinking of testers working under restrictions in their context, or with demands placed on them, that they don’t know how to handle. Or even worse: intentionally doing stuff you know can be done better, because it is faster or easier, or because others ask you to do it like that. Of course there are novices in the field and they do work that can be done better. I do not call that bad work since they are still learning. Still, there is a limit to that as well. If you have been a tester for several years and you still cannot apply more than three test techniques without having to look them up, I will call that bad work as well. I expect continuing professional development from everybody in the field, simply because in IT, as in any profession, we need to keep developing ourselves to become better.

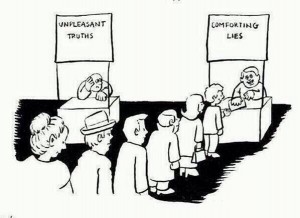

Lying is always bad work. And I have seen many people lie in their work: lying to managers to get off the hook, or making messages sound just a little better by leaving out essential stuff. Telling people what they want to hear to make them happy is bad work too. What do you do when your project manager asks you to change your test report because it will harm his reputation? Or what do you tell the hiring manager in a job interview when he asks you if you are willing to learn? Many people say that they are very willing to learn, but are they really?

Bad work is claiming things you can’t accomplish: like assuring quality or testing everything. It is also bad work when you do not admit your mistakes and hide them from your colleagues. Bad work is accepting an assignment when you know you do not have the right skills or the right knowledge. In secondment assignments this is an issue sometimes. I have taken on a project once where the customer wanted something I couldn’t deliver but because my boss wanted me on the position I accepted. That was wrong and the assignment didn’t work out. I felt very bad about it: not because I failed, but because I knew upfront I would fail! I won’t do that again, ever.

So how do I handle this?

I push back! Of course I do not run away from a project when I see or smell bad work. I do try to tackle the challenges I am faced with. I use three important means to try to change the situation: my courage, asking questions and my ethics. Some examples: when a manager starts telling me what I should do and explicitly tells me how I should do it, I often ask how much testing experience the manager has. Given the answer, I tell him in a friendly way that I am very willing to help him achieve his goals, but that I think I am the expert and I will decide how I do my work. Surely there is more to it, and I need to be able to explain why I want it to be done differently.

I also ask a lot of questions that start with “why”. Why do you want me to write test cases? What problem will that solve? I found out that people often ask for things like test cases or metrics because it is “common practice” or folklore, not because it will serve a certain purpose. Also, once I know the reasons behind the requests, it is easier to discuss them and to push back. A great example of this is the last blog post “Variable Testers” by James Bach.

Adam talks about changing people’s minds: “One of the most difficult skills I’ve found to learn as a tester is the ability to justify your approach and your reasons for taking it, and being able to argue your case to someone else who has a misguided perspective on what testing does or should involve. Having these discussions, and changing peoples minds, is a big part of what good testing is.”

I fully agree. In my last blog post “heuristics for recognizing professional testers” my first heuristic was: “They have a paradigm of what testing is and they can explain their approach in any given situation. Professional testers can explain what testing is, what value they add and how they would test in a specific situation.” To become better as testers and to advance our craft, we should train the skills Adam mentions: justifying our approach and being able to argue our case.

It will make you better and happier!

Jerry Weinberg listed his set of principles in a blog post “A Code of Work Rules for Consultants“. In this blog post he says: “Over the years, I’ve found that people who ask these questions and set those conditions don’t wind up in jobs that make them miserable. Sometimes, when they ask them honestly they leave their present position for something else that makes them happier, even at a lower fee scale. Sometimes, a client manager is outraged at one of these conditions, which is a sure indication of trouble later, if not sooner.”

It will make you a happier person when you know what your limits are and are able to make them clear to the people you work with. It will prevent you from getting into situations that make you miserable. “That’s the way things are” doesn’t exist in my professional vocabulary. There is always something you can do about it. And if the situation you end up in, after you have tried the best you can, isn’t satisfying to you: leave! Believe me, it will make you feel good. I have got the t-shirt! And… being clear about your values will also make you better at your work. Maybe not directly, but indirectly it will.

Daniel Pink speaks about the self-determination theory in his book “Drive“. The three keywords in his book are autonomy, mastery and purpose: “human beings have an innate drive to be autonomous, self-determined and connected to one another, and that when that drive is liberated, people achieve more and live richer lives” (source: http://checkside.wordpress.com)

But but but….

Of course I know there is the mortgage and the family to support. Maybe it is easy for me to refuse bad work. Maybe I am lucky to be in the position I am. But think again… Are you really sure you can’t change anything? And if your ethics are violated every day, do you just resign yourself to it? Your ethics will act as heuristics signalling that there is a problem and that you need to do something. I didn’t say you have to leave immediately, and if you are more patient than I am, maybe you do not have to leave at all… But remember: for people who are good at what they do, who are confident in what they will and will not do and who speak up for themselves, there will always be a place to work.

Now you!

Have you ever thought about integrity? What are your guiding principles, values or ethics? What would you call bad work? And what will you do next time somebody asks you for something that conflicts with your ethics?

While reading stuff online about (refusing) bad work I ran into this blog post by Cal Newport about being bad at work: “Knowledge Workers are Bad at Working (and Here’s What to Do About It…)“. Interestingly enough, Cal Newport wrote a book called “So Good They Can’t Ignore You: Why Skills Trump Passion in the Quest for Work You Love” about the passion hypothesis, in which he questions the validity of the hypothesis that occupational happiness has to match pre-existing passion.

In several recent talks and blog posts I talk about passion. In the talk discussed in this blog post I also claim that passion is very important, and I show a fragment of the Stanford 2005 commencement speech by Steve Jobs. Cal uses exactly the passage I showed in my talk in the first chapter of his book: “You’ve got to find what you love. And that is as true for your work as it is for your lovers. Your work is going to fill a large part of your life, and the only way to be truly satisfied is to do what you believe is great work. And the only way to do great work is to love what you do. If you haven’t found it yet, keep looking. Don’t settle. As with all matters of the heart, you’ll know when you find it. And, like any great relationship, it just gets better and better as the years roll on. So keep looking until you find it. Don’t settle.”

Anyway, interesting stuff to be researched. I bought the book and started reading it. To be continued…

Via Twitter Helena Jeret-Mäe asked this question: “What are your criteria for professionalism for testers in CDT community?”. Later via Email she updated her question to: “So the updated version of my question is what are the heuristics for recognizing professional testers in your opinion? I changed “criteria” to heuristics… it’s less categorical. And I’ll leave the term “professionalism” up to you as well – I don’t know exactly what you meant by it.”

In my talk “How to become a great tester” at ContextCopenhagen last January I talked about testers and their skills. I said that most testers don’t know what they’re doing and can’t explain effectively what value they add. I have seen many testers who use the same approach over and over again. If I ask them to name test techniques, they can only name a few. If I ask them to explain techniques to me or show how they work, I get no answers. I find that shocking and I cannot understand why testers who call themselves professionals know so little about their craft and do not study their craft.

That is why I make a distinction between professional testers (of which I think there are only few) and testers by profession. Of course I know and understand that there will always be people who have a 9 to 5 mentality, do not read books or blogs and only want to do courses when the boss pays for them. I accept that reality, but that doesn’t mean I want to work with them!

Let me now answer the questions asked; I have done enough ranting for now…

Professionalism is what it means to be a professional and what is expected of one. Professional testing is complex and diverse and has several dimensions: knowledge, skills, experience, attitude, ethics and values. I wrote a blog post “What makes a good tester?” in 2011 on this topic. I have a broader view on it now, although everything I wrote then still holds. In my earlier blog posts I didn’t mention values and ethics, and I now think they are extremely important. James Bach writes about them in his blog post “Thoughts Toward The Ethics of Testing”. A great practical example of ethics and values is Rapid Testing. Look at “the premises of Rapid Testing” and “the themes of Rapid Testing”; both can be found in the slides of Rapid Software Testing.

It is hard to recognize professional testers. Every tester is unique and brings different characteristics to the table. Every project is different too, and different characteristics may be important in finding the right professional tester for your project. There are many characteristics to consider, so heuristics can be used to recognize professional testers. Heuristics are fallible methods for solving a problem or making a decision: shortcuts to reduce a complex problem, or rules of thumb. They are used to determine good enough, feasible solutions for difficult problems within reasonable time. On Wikipedia I found this definition: “Heuristics are experience-based techniques for problem solving, learning, and discovery that give a solution that is not guaranteed to be optimal. Where the exhaustive search is impractical, heuristic methods are used to speed up the process of finding a satisfactory solution via mental shortcuts to ease the cognitive load of making a decision.”

My heuristics for recognizing professional testers:

1) They have a paradigm of what testing is and they can explain their approach in any given situation.

Professional testers can explain what testing is, what value they add and how they would test in a specific situation.

2) They really love what they do and are passionate about their craft.

Testing is difficult and, to be successful, testers need to be persistent in learning and in their work. Passion helps them become real professionals. Watch this video where Steve Jobs talks about passion.

3) They consider context first and continuously.

To be effective, testers need to choose testing objectives and techniques by looking first at the details of the specific situation. They recognize that there are no best practices but only good practices in a given context.

4) They consider testing as a human activity to solve a complex and difficult problem that requires a lot of skill.

Testers recognize testing is not a technical profession. Testing has many aspects of social science since software is built for humans by humans.

5) They know that software development and testing is a team sport.

Collaboration is the key in becoming more effective and efficient in testing. Software development is a team sport: people, working together, are the most important part of any project’s context. A great tester knows how to work with developers and other stakeholders in any situation.

6) They know that things can be different.

Professional testers use heuristics, practice critical thinking and are empirical. They know that time to test is limited, systems are becoming increasingly complex and thinking of everything is hard. Therefore heuristics are a very helpful “tool” for testers. They also know that biases and logical fallacies can fool them. They practice critical thinking to deal confidently and thoughtfully with difficult and complex situations. Testers have to accept and deal with ambiguity, situation-specific results and partial answers.

7) They ask questions before doing anything.

Testing depends on many things. What is the context of the stuff I am looking at? What is the information we need to find? What is the testing mission? Giving a tester an exercise in an interview can easily test this. If he or she starts working on it or gives you an answer without asking questions, this tells you something.

8) They use diversified approaches.

There is no approach or technique that will find all kinds of bugs or fulfil all test goals. Different bugs are found using different techniques. In order to be able to do this, testers need to know many techniques and approaches. This demands training, practice and some more practice.

9) They know that estimation is more like negotiation.

Have a look at some blog posts by Michael Bolton:

- Test project estimation rapid way

- Test estimation is really negotiation

- Why is testing taking so long

- Project estimation and black swans

10) They use test cases and test documentation wisely.

The context determines what test documentation you should make and what kind of documentation is useful. Quite recently a great (and long) article by James Bach and Aaron Hodder was published in Testing Trapeze: “Test cases are not testing: Toward a culture of test performance”. Fiona Charles has also written some interesting stuff about test documentation in her article “Breaking the Tyranny of Form”.

11) They continuously study their craft seriously, practice a lot and practice “deliberate practice”.

Deliberate practice is a structured activity with the goal of improving performance. According to K. Anders Ericsson, there are four essential components of deliberate practice. It must be intentional and aimed at improving performance, designed for your current skill level, combined with immediate feedback, and repetitious.

12) They refuse to do bad work, never fake and have the courage to tell their clients and the people they work with about their ethics and values.

Testing is often underestimated and many believe “everybody can test”. I have experienced project managers who tell me how to do my work. Professional testers know how to push back and are able to explain why they do what they do.

13) They are curious and like to learn new things.

Testers find pleasure in finding things out. A good read is the curious behaviour by Guy Mason: “a curious mind is one that could be described as an active, engaged and inquisitive mind. Such a mind frequently seeks out new information, enjoys discovering what there is to discover and enjoys the process that comes along with this goal.“

14) They could have any of the important interpersonal skills mentioned in the list below. As stated earlier it depends on the context which skills are most important.

- Writing skills (like reporting, note taking and concise messages)

- Communication skills (many different ones, like listening, storytelling, presentation, saying no, verbal reporting, arguing and negotiating)

- Social and emotional skills (like empathy, inspiring, networking, conflict management and consulting)

- Problem solving skills

- Decision making skills

- Coaching and teaching skills

- Being proactive and assertive

15) They have excellent testing skills:

- Thinking skills (critical, lateral, creative, systems thinking)

- Analytical skills

- Modelling

- Risk analysis

- Planning and estimation

- Applying many test techniques

- Exploring

- Designing experiments

- Observation

Have a look at the Exploratory Testing Dynamics in the RST appendices, which contain several lists of skills.

16) They have sufficient technical skills.

A tester needs many technical skills, such as being able to use tooling, coding skills, or a willingness to learn what they need to know about the technical structure of the application they are testing. Examples include test automation skills like scripting, SQL skills to work with databases, being able to configure and install software, and the knowledge and skills to work with the platform the system under test runs on (Windows, Linux, mobile, etc.).

This list is probably not complete, and it is quite possible that I have made some mistakes. So please let me know if you have any contributions or improvements. Also: being a professional doesn’t mean you are an expert in all the skills mentioned. Have a look at the Dreyfus model to learn more about expert level. A real professional knows what he or she can do and when to ask for help. They are not afraid to learn and not afraid to make mistakes. I guess that would be the 17th heuristic in the list.

BTW: I googled “code of ethics software testing” and found the ISTQB Code of Ethics for test professionals. I wonder if people who pass the exam know about these and, more importantly, practice them… What about you?

More information

Some more posts that discuss great testers:

- Becoming a world class tester

- What makes a good software tester?

- 10 Things you can do to become a better tester

- What makes a good tester?

- 10 features a perfect software tester should have

And last but not least: have a look at the Testers syllabus by James Bach. An awesome document which lists many important skills and areas of knowledge. It inspired me to read about and study different areas.

Wanna know why I am context-driven? Published on the DEWT blog: Why I am context-driven!

“Because testing (and any engineering activity) is a solution to a very difficult problem, it must be tailored to the context of the project, and therefore testing is a human activity that requires a great deal of skill to do well. That’s why we must study it seriously. We must practice our craft. Context-driven testers strive to become the Jedi knights of testing”. James Bach

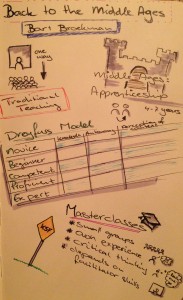

These are my sketchnotes from DEWT4. The central theme of the fourth DEWT peer conference was “Teaching Software Testing”.

