Shmuel Gershon’s tweet pointed me to an article on the scrum alliance website Agile Methodology Is Not All About Exploratory Testing by Dele Oluwole. I share Sigge Birgissons concerns: “the post clearly shows what I mean when having deep concerns about the knowledge of testing in agile community”.

I think Dele doesn’t fully understand what testing is or at least he uses a different definition than I do. And certainly he doesn’t understand exploratory testing. Rikard Edgren wrote an open letter to explain what testing is. Please read his high level summary of software testing to understand what testing means to me.

"It is imperative to state in clear terms why Agile testing cannot be all about exploratory testing"

(The text in these gray frames are quotes form the article by Dele)

I wrote a post some weeks ago about agile testing titled what makes agile testing different: agile testing isn’t that much different from “other” testing. Why do some people think agile is so different? To me there is no such thing as Agile testing. There is testing in an agile context. And every agile context is probably different. So saying that agile testing cannot be about exploratory testing makes no sense to me.

It is unequivocally the case that: you cannot estimate your time for exploratory testing, i.e., assign points realistically

Estimating testing is an interesting topic. This blogpost by Morgan Ahlström nicely emphasizes that estimates are guesses. Martin Jansson of the Test Eye writes about “utopic estimations” here. Michael Bolton wrote a lot about estimation here, here and here. He explains that testing is an open-ended task which depends on the quality of the products under test. The decision to ship the product (which includes a decision to stop testing) is a business decision, not a technical one.

Especially the fifth part of the black swan series is interesting in this discussion because Michael writes about the fallacies surrounding “development” and “testing” phases (by James Bach). He also explains why estimating the duration of a “testing project” by counting “test cases” or “test steps” is not a smart thing to do.

"You cannot plan for exploratory testing, as you do not have defined expected results."

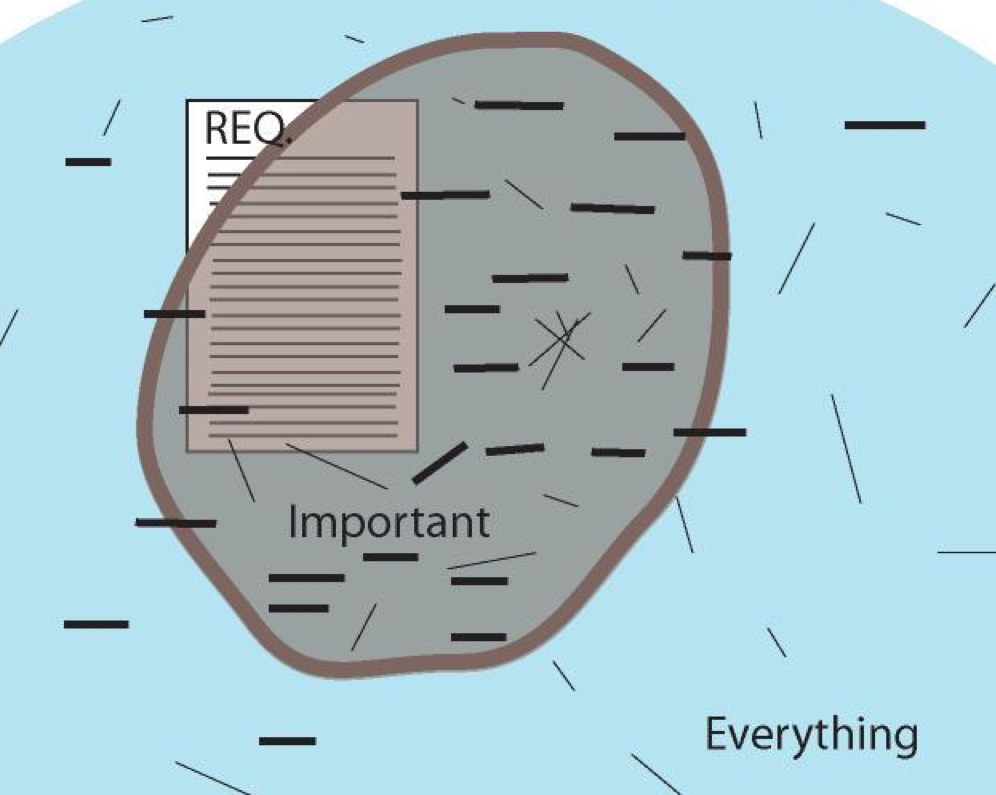

Why are some people so obsessed by expected results? And why is there a need to have expected results to be able to plan testing? Expected results can be very helpful, but there is much more to quality then doing some tests with an expected result. A definition that I like is by Jerry Weinberg: “Quality is value to some person”. To understand this, you might want to read this blogpost to understand that there is more to quality than the absence of bugs. Also have a look at the excellent free ebook “The Little Black Book on Test Design” by Rikard Edgren. On page 2-6 he uses the “testing potato” to explain that there are more important aspects to the system than the requirements only.

Why are some people so obsessed by expected results? And why is there a need to have expected results to be able to plan testing? Expected results can be very helpful, but there is much more to quality then doing some tests with an expected result. A definition that I like is by Jerry Weinberg: “Quality is value to some person”. To understand this, you might want to read this blogpost to understand that there is more to quality than the absence of bugs. Also have a look at the excellent free ebook “The Little Black Book on Test Design” by Rikard Edgren. On page 2-6 he uses the “testing potato” to explain that there are more important aspects to the system than the requirements only.

"There is no defined scope for exploratory testing."

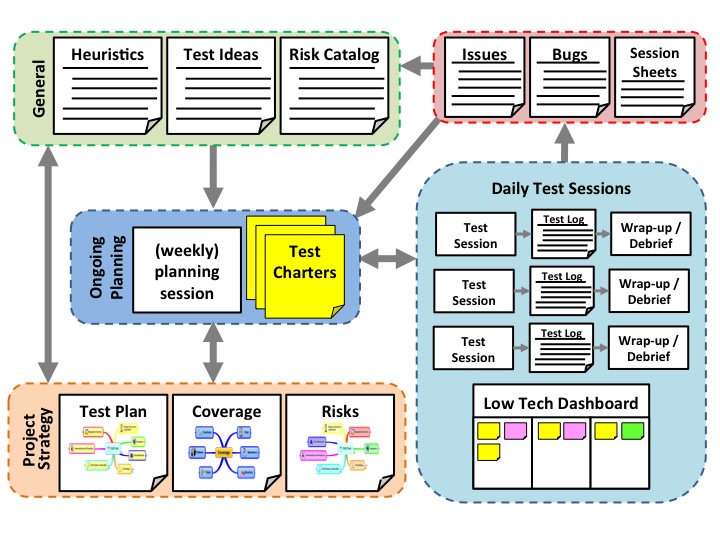

In the exploratory testing I do in my projects there is a “scope”. I do targeted and focused testing using charters, sessions and planning my testing using a dashboard that resembles a scrum board. Have a look at the slides of my presentation “Boosting the testing power with exploration“.

Using a coverage outline in a mind map or a simple spreadsheet, I keep track of what I have tested. My charters (a one to three sentence mission for a testing session) help me focus, my wrap-up and/or debriefings help me determine how good my testing was. My notes and the sessions sheets keep track of what I have done in my testing. Like in scrum, I use “standup” meetings to plan my testing. In these meetings we discuss progress, risks, priorities and charters to be executed. This helps us to make sure we do the best testing we can do continuously.

Using a coverage outline in a mind map or a simple spreadsheet, I keep track of what I have tested. My charters (a one to three sentence mission for a testing session) help me focus, my wrap-up and/or debriefings help me determine how good my testing was. My notes and the sessions sheets keep track of what I have done in my testing. Like in scrum, I use “standup” meetings to plan my testing. In these meetings we discuss progress, risks, priorities and charters to be executed. This helps us to make sure we do the best testing we can do continuously.

"The tester, product owner, and Scrum team are not in control."

I am not sure if I understand what you are trying to point out here. Are you saying that the team is not in control when doing exploratory testing? My model above shows that you can be in control when doing exploratory testing when done right. Exploratory testing is NOT ad hoc or unstructured when done right. If you do it right you will have control!

"There is no measure of progress, as testers cannot determine when testing is enough."

How do we determine how much testing is enough? Stopping heuristics might help here. No matter how simple the system is we are building, there are simply to many variables to test everything. So testing is about making choices what to test and what not to test. Even with a huge amount of automated checks, we can not check/test everything (to understand the difference between testing and checking read this). Testing is not about doing X test cases and when they all pass, you are done. Testing is providing information for managers to make good decisions. And when do managers have enough information to inform their decisions?

Still, not many Agile projects will require just two phases, like integration and regression. But it's definitely not only exploratory testing that's needed, as is erroneously believed in some quarters.

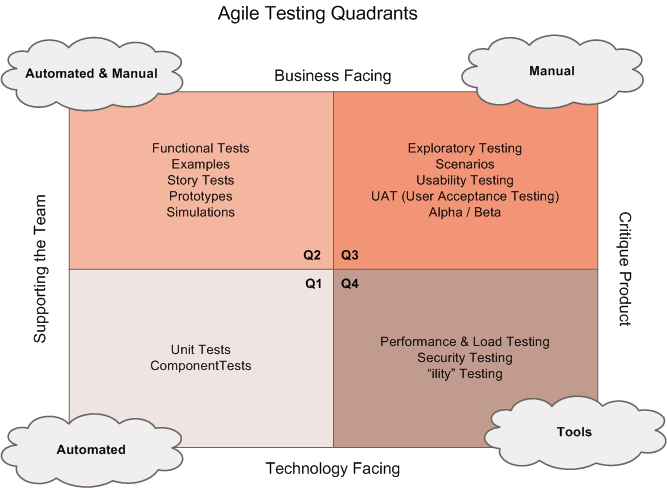

I am not sure what you mean by two phases. What do you mean by testing in phases? I like to use the agile testing quadrants when I try to explain how I think of testing in an agile context.

A team is developing software and the programmers do testing before checking the software in and making it available to the team. How do we call that sort of testing? Unit testing? But is that /only/ testing done by the programmer? I argue that the programmer might do all kinds of testing before checking his code in, even functional and acceptance tests. They probably will create a lot of automated checks and maybe even do some exploratory testing to see if the software meets their expectations: quickly testing some usability and performance aspects. So before the software is checked in, the programmer has covered testing in all 4 quadrants. This does not mean testing is done. More testing can be done, it depends on the context. What does the product owner wants to know? What are the risks involved? How much time do we have left? When discussing a test strategy I try not to speak about phases, I like to discuss what gets covered and why. What information is needed by the team and it’s stakeholders? When talking about coverage I do not mean code coverage but test coverage: the extent to which we have traveled over some map (model) of the system.

So I don’t think you can say “only exploratory testing is needed or not”.

Dele concludes his article with this statement:

It is the responsibility of the tester (and the Agile/Scrum team) to ensure that acceptance testing is in line with the expectation of the product owner. If we agree that there is an expectation, we therefore have to design test cases (even if minimal) that will verify the specified acceptance criteria."

Dear Dele please read about testing and exploratory testing. Some good starting points are these lists of resources on this blog or the one made by Michael Bolton: resources on Exploratory Testing, Metrics, and Other Stuff. I am happy to point you to more good sources of information if you are interested. Just let me know.

Recent Comments