At Agile Testing Days 2019, during the keynote “I can’t do this… alone! A Tale of Two Learning Partners” by Lisi Hocke and Toyer Mamoojee, I was inspired by their story about learning pacts. During the keynote, Nicole Errante and I started a learning pact of our own. In our first call we created a plan. One of the books I added to our pact was “7 Rules for Positive, Productive Change – Micro Shifts, Macro Results” by Esther Derby. In this blog post, Nicole and I share a summary of the book and what we learned from it.

Huib: The book is about change, and it resonated with me from the very first page. Esther opens with: “People hire me because they want different outcomes and different relationships in their workplaces. My work almost always involves change at some level…” That is exactly what I do and have been doing for many years. While reading and discussing the book with Nicole, I recognized so many things from my own experience. The introduction describes change as a social process! Work, and life in general, is heavily influenced by social processes, and everything we do has major social aspects to it. Unfortunately, this aspect is often underexposed, especially when people want to be in control. We know from the work of Dave Snowden and the Cynefin framework that best practices do not work in complex situations, and in IT we often find ourselves in exactly those situations. This is why I got so inspired by context-driven testing years ago. Finally I found people who took the human aspects of testing and IT seriously. They did not approach (testing) problems with mechanistic thinking, did not see IT as technology-centered, and did not strive for certainty but were okay with uncertainty. It was a community where human interaction and feelings played a prominent role in solving problems. This book takes the same approach to change. Change is a social thing. Esther’s book hands you a set of interventions, which she calls rules, to help change happen. These rules are heuristics or guidelines.

Nicole: Change in life, whether at work or in our personal lives, is inevitable. The philosopher John Locke said: “Things of this world are in so constant a flux, that nothing remains long in the same state.” But we humans tend to resist change; we like stability, routines, and the known. Maybe we would be less resistant to change if it were implemented in a way that considered this human side of it. That’s one of the things I love about Esther’s book: it keeps people at the center of the change process. The success of a change is not just about the process but about the people in it. I have been lucky enough to work at the same company for the past 13 years, but that means my experience of change in the workplace is narrower than Huib’s. A few years ago, however, our company wanted to move from a very waterfall-based software development process to a more agile one. I think most people at my company would agree that the change was more painful and took longer than anyone expected. I read this book through the lens of that change: what could we have done to make the change process better? And what lessons can we learn for future changes?

Summary: The introduction ends with lessons learned from a project Esther did. I recognized many of them, and they made me want to read the book even more. The lessons are:

- Skill and will aren’t always the problem

- Training is useful and necessary, but it’s not sufficient

- Standardizing nonstandard work may make matters worse

- Long feedback loops delay learning and improvement

- Observed patterns result from many underlying influences

The first chapter deals with change by attraction. If you try to force change upon people, they will react with resistance. Mandating change makes people feel a loss of control, and they have no personal buy-in to the change. Esther says: “At best, coercion, rewards, and positional authority result in compliance, not engagement…” You don’t want people just going through the motions; you want them actively involved and eager to give things a try. For change to happen, some things need to be learned and others need to be unlearned. In knowledge work there is no best practice that works in every situation. Quite the opposite: we need to experiment to find out what works and what doesn’t. Change is a matter of responding to people and adapting to their needs, attracting and engaging them instead of pushing and persuading.

- Strive for Congruence

Congruence is an alignment of a person’s interior and exterior worlds, balancing the needs and capabilities of self, others, and context. Ignoring other people’s needs and capabilities is probably the most common cause of incongruence. When you are incongruent, you are in a state of stress, and when people are stressed, it is hard to think, learn, or engage. You cannot have successful change when learning and engagement are suppressed. Congruence is essential for change by attraction. It contributes to safety, which people need in order to solve problems, learn, and speak up about mistakes and things they don’t know. Being empathetic helps you understand where someone else is coming from and what they have to lose by changing, so that you don’t ignore their context during the change. Empathy helps people feel safe and understood. Empathy and congruence go hand in hand and are essential for making long-term changes. At the end of chapter 2, Esther lists a couple of questions you can use to be more congruent.

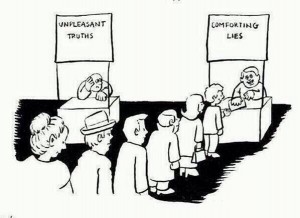

- Honor the past, present and people

When implementing change, it is important to show respect for existing belief systems, for the experience and knowledge people have, and for the effort people have made to keep things going with the system currently in place. Build trust and relationships before coaching others. People seldom think that they themselves are wrong. They may want to improve, but most do not want to hear from an outsider that they are doing it wrong, so we have to choose our language with care. Remember that while you have ideas about how things can be better, the people you want to change know things you don’t, and those things will be important in this process. By acknowledging and exploring the negative space of change, we prevent unpleasant surprises along the way. Again, Esther has a great list of questions to discover what lives in this negative space. People don’t resist change; they respond to the way it is implemented. You can learn from the reasons behind their responses and use them to adapt the change. Use Transformational Communication (inquiry, dialogue, conversation, understanding) instead of Convincing and Persuading (advocacy, debate, argument, defending) to gain openness, trust, and shared understanding. Finally, don’t take what works for granted by focussing only on the problem. Build upon what already works.

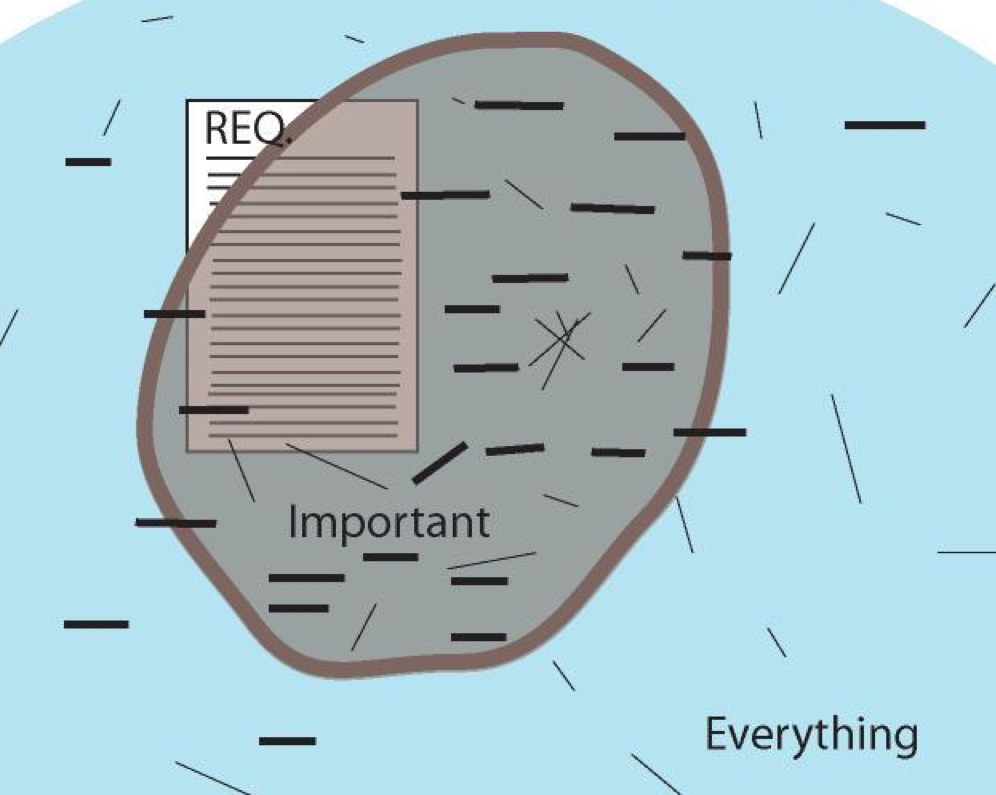

- Assess what is

“Every system is perfectly designed to get the result that it does” (W. Edwards Deming). Change starts from where you are now, and paying attention to the context increases the chance that problems will get solved. How did the existing conditions in the organization produce the current patterns and results? This chapter introduces three techniques for looking beyond symptoms and finding influencing factors:

- Containers, differences, and exchanges (CDE): describes the three conditions that determine the speed, direction, and path of a system as it self-organizes. This helps you discover both the formal and the invisible structures that factor into the behavior of the organizational system.

- SEEM Model: Steering, Enabling-and-Enhancing, Making. This model shows the different perspectives of people in the organization on the basic set of concerns each company has: how to achieve clarity so people know what to do, what conditions are needed for people to do good work, and what productive constraints will streamline decision making and guide actions and interactions.

- Circle of Influences: shows how the factors influence one another and where to find virtuous and vicious loops. This method helps you find the many influencing factors that result in problems, instead of focussing too much on the problems themselves. Factors that influence several others are good candidates for running experiments.

- Attend to networks

An organisation has a formal and an informal side. Informal social networks within the organisation have great influence and cannot be ignored, even though they are not visible on the org chart. The most important social networks for change are the ones people turn to, and trust, for advice. That is why Esther suggests mapping the networks within the organisation, so you can use them productively and avoid breaking them inadvertently. You can also strengthen existing networks by reducing the number of hops between people who are not directly connected, which reduces bottlenecks. Networks can also carry rumors. Esther suggests capturing them on a rumor control board to find out what people are worried about. Finally, if some people do not want to change something, just do it with other people who do (change by attraction). The resisters will probably follow when they see others doing it. This is the fear of missing out in action.

- Experiment

Solving big, complex problems is not easy because many factors are involved and they cannot be solved independently. Trying to solve them with big changes causes big disruptions, and that is risky. Small experiments foster learning and engage the people you work with. Experiments are FINE (Fast feedback, Inexpensive, require No permission, and Easy). Landing zones make big changes small by defining intermediate states to which the organisation can evolve. After reaching a landing zone, you can reassess whether your bigger goal is still relevant and course-correct as needed. Safe-to-fail probes are good examples of experiments. Find something you can try without asking for budget or permission. Don’t worry about failing: keeping the experiment small means any risk should be contained, and you can learn from what went wrong. Esther lists a great set of questions to help shape experiments and another set to test assumptions. Reflecting on what works in the experiments involves double-loop learning.

- Guide and allow for variation

Knowledge work and complex organizations need to allow for variation and emergence to perform effectively. Unnecessary standardization leads to inefficiency and suboptimal behavior. Coherence is more desirable than consistency, which is why Esther suggests using boundary stories to help people focus on achieving a similar outcome. Boundary stories give people a guideline for reaching the outcome you want (and avoiding those you don’t) while allowing them to mindfully decide how to get there based on their unique situation. Change will also be evolutionary: small steps towards the end goal. Landing zones are useful here, and so is a horizon map: a thinking tool where you start with the desired outcome and work from right to left, filling in the conditions and constraints needed for the change to take place. Since change is social, it requires changing habits of thought and cognitive frameworks. Change will happen if we manage to influence the metaphors and narratives within the organisation. Explain the outcomes you want and why, then add some boundary stories as a guide to how to get there. This allows people to refine the change based on their knowledge and experience, so they own the change rather than being forced into it.

- Use your self

People bring their personalities, characteristics, belief systems, and life experiences to work, and this influences what they do and how they do it. This includes you, the person involved in bringing about the change. Change is a social process that needs personal connection with the people involved. This works best with empathy, curiosity, patience, and observation. These skills can and must be practiced. Esther gives a nice list of questions to help prompt empathy. She also supplies helpful overviews of types of questions and of how to focus questions so you stay curious and patient. These questions help you avoid “why” questions, which often make people defensive. The question, and how you ask it, determines the answer you get. Making sense of your observations requires awareness of your biases and testing your observations. Be generous when interpreting the motivation behind what people do and the results they achieve.

Learnings Huib

It was fun to work with Nicole and read the book together, discussing two chapters each time we met. Sharing our stories and experiences with change and discussing situations at work helped us understand what the book is about and gave us ideas about where we could try the things we were reading. The parts on empathy, curiosity, and patience really resonated with me. As I described in my blog post “Mastering my mindset”, I am becoming more and more aware that growth, learning, improvement, and change need empathy. I am working on that, and this book gave me more tools and inspiration to get there. I have already used several questions from the lists in the book, and I enjoy using them. Landing zones are a great way to create small steps of change. I’m now working on a horizon map with a scrum master to get insight into what is going on in his team. I can’t wait to work on the map with the team.

Learnings Nicole

I agree with Huib that reading the book a couple of chapters at a time and then discussing them was a really fun way to read a book. It also helped reinforce the learnings of each chapter. Being able to share our thoughts and experiences not only made things clearer but also gave us insight into where to apply them in our work. When our organization went through the big agile change, I thought the main reason it didn’t go smoothly was that people didn’t understand the reason behind the change. While that is true, Esther’s book helped me realize that other factors were involved as well. The change we bit off was too big: we should have started where we were and run smaller experiments to learn and adjust along the way. People’s knowledge, experience, and feelings should also have been considered in order to get them actively involved in the change. I look forward to applying the lessons in this book to future changes in our organization. I also want to use some of the questions from the book to help work through the day-to-day challenges our team faces.

Finally

The book is easy to read and has some great stories that illustrate the rules and lessons. It has many valuable, ready-to-use lists of questions and methods that will help in experimenting with change. Every chapter ends with a great set of takeaways that summarize what you just read in different words. We absolutely recommend this book to anybody dealing with change in their work.

Automation comes with a tasty and digestible story: replace messy, complex humanity with reliable, fast, efficient robots! Consider the robot picture. It perfectly summarizes the impressive vision: “Automate the Boring Stuff.” Okay. What does the picture show us?

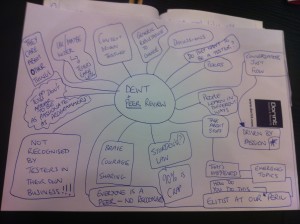

I push back! Of course I do not run away from a project when I see or smell bad work. I do try to tackle the challenges I am faced with. I use three important means to try to change the situation: my courage, asking questions, and my ethics. For example, when a manager starts telling me what I should do and explicitly how I should do it, I often ask how much testing experience the manager has. When given the answer, I tell him in a friendly way that I am very willing to help him achieve his goals, but that I consider myself the expert and I will decide how I do my work. Surely there is more to it, and I need to be able to explain why I want it done differently.

Why are some people so obsessed with expected results? And why do they need expected results to be able to plan testing? Expected results can be very helpful, but there is much more to quality than doing some tests with an expected result. A definition that I like is Jerry Weinberg’s: “Quality is value to some person”. To understand this, you might want to read

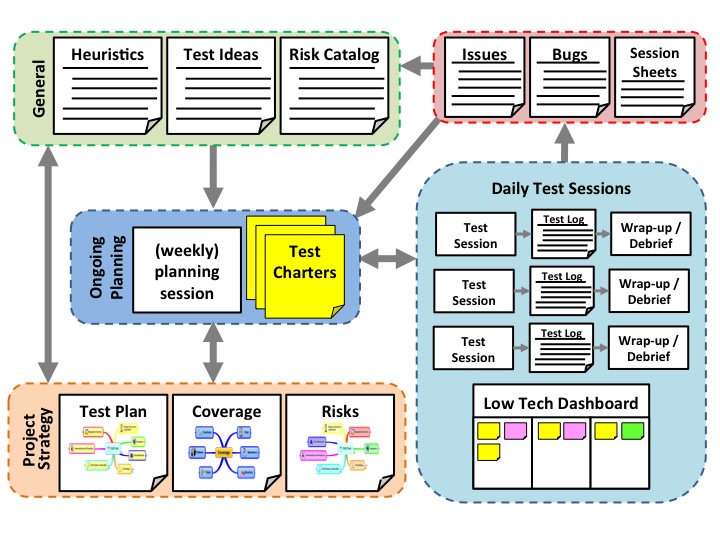

Using a coverage outline in a mind map or a simple spreadsheet, I keep track of what I have tested. My charters (a one to three sentence mission for a testing session) help me focus, my wrap-up and/or debriefings help me determine how good my testing was. My

pares these tasks to the testing activities according to TMap. In this part of his talk he covers the role description, the tasks in testing, and their importance to the tester. Then he talks about developments in the testing profession, covering developments in approach, in the testing craft itself, and in the systems under test. The latter coincided with the theme of this event, “The Cloud”. In the last part of his talk, Leon gives his view on the impact of these developments on the role of a test manager. I have summarized them in the table below.