Last week Rik Marselis send me an email pointing me to an article with the title “The adaptability of a perceptive tester”. He added: “Have you read this article? Should appeal to you!”. The article is written by a couple of Dutch (Sogeti) testers. And they, so the introduction tells me, get together once in a while to discuss the profession of testing and quality assurance. This time they discussed some remarkable examples of their experience that perceptive testers (who are aware of the specific situation they’re in) adapt their approach to fit the specific needs.

I replied to Rik with the following email:

| Hey Rik,

Nice article, I had already seen it. But adaptive or perceptive is not context-driven. I also totally disagree with the conclusion: TMap Next contains a number of dogmas (or rather is based on a number of dogmas) like: testing can be scheduled, the number of test cases is predictable, content of a test case is predictable, sequence of process, etc. Regards, |

Rik replied with this email:

| Hi Huib,

We really need to reserve some time to discuss this from different sides because some things that you say I totally disagree with. A conscious tester can handle any situation with TMap. I think whether ET is a technique or approach is really a non-discussion. TMap calls it a technique so you can approach testing in different ways in different situations. And since TMap itself is a method you cannot call ET a method too. I think Context-driven means you choose your approach depending on the situation. Perceptive means conscious, as you are aware of the situation, you can choose an appropriate approach. Well, it is worth discussing. Regards, |

Okay, so let’s discuss!

Exploratory testing

Let’s start with the ET discussion. What does TMap say about this? ET is a test design technique. And the definition of a test design technique (on page 579 of the book “TMap Next for result driven testing”): “a test design technique is a standard method to derive, from a specific test basis, test cases that realise a specific coverage”. Test cases are described on page 583 of the same book: “a test case must therefore contain all of the ingredients to cause that system behaviour and determine wheter or not is it correct … Therefore the following elements must be recognisable in EVERY test case regardless of the test design technique is used: Initial situaion, actions, predicted result”.

Let’s connect the dots: ET is called a test design technique. A test design technique is defined as: a method used to derive test cases. But ET doesn’t use test cases, not in the way TMap defines them. It can, but most of the time it doesn’t… Mmm, an inconsistency with image, a claim, expectations, the product, statues & standards. I would say: a blinking red zero! Or in other words, there /is/ something wrong here!

What is Exploratory Testing? Paul Carvalho wrote an excellent blog post simply titled “What is Exploratory Testing?” on this topic and I suggest people to read this if they what to understand what ET is. Elisabeth Hendrickson says: “Exploratory Testing is simultaneously learning about the system while designing and executing tests, using feedback from the last test to inform the next.”

Michael Bolton and the context-driven school like to define it as: “a style of software testing that emphasizes the personal freedom and responsibility of the individual tester to optimize the quality of his or her work by treating test design, test execution, test interpretation, and test-related learning as mutually supportive activities that continue in parallel throughout the project.”. Michael has a collection of interesting resources about ET and it can be found here.

So Rik, your argument “since TMap itself is a method you cannot call ET a method too” is total bullshit! It sounds to me as “there is only one God…”.

Context-driven testing

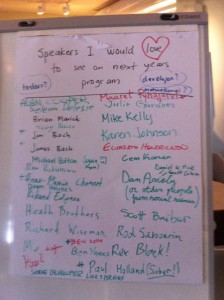

Don’t get me wrong, being adaptive and perceptive is great, but that doesn’t make testing context-driven. A square is a rectangle but a rectangle is not a square! Please have a look at the context-driven testing website by Cem Kaner. Also read the text of the key note “Context-Driven Testing” Michael Bolton gave last year at CAST 2011. In his story you will see that adaptive (paragraph 4.3.4) is only a part of being context-driven. I admit, it is not easy to really comprehend context-driven testing.

Do you think it was TMap Next that was the common success factor in the stories shared in the article… I doubt it!

Recent Comments